Latent Variable Dialogue Models and their Diversity

This model increases the diversity of generated responses by conditioning them on a latent variable.

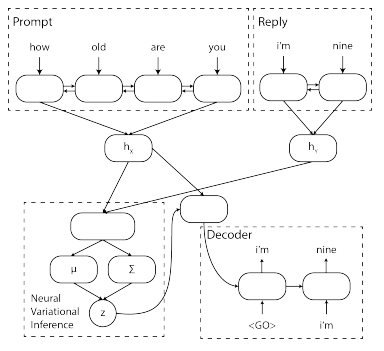

In the summer of 2017, I joined Prof. Kim’s undergraduate research program and started my first NLP research project. As a beginner, I was in charge of implementing a baseline QA system, the one presented in the paper "Latent Variable Dialogue Models and their Diversity". This model applies the idea of a VAE to a seq2seq QA system. It encodes the question Q and the target answer A into a latent variable z, and trains the posterior P(z|Q, A) so that it approximates the prior P(z), which follows a Gaussian distribution. This method can tackle the problem of boring (deterministic) replies by encoding the variation between responses into z. I implemented it in TensorFlow with my teammate.
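To make the posterior-to-prior matching concrete, here is a minimal sketch of the two pieces a VAE-style model typically needs: sampling z with the reparameterization trick and the KL penalty that pulls the posterior toward the prior. It is not the project's TensorFlow code. It assumes a diagonal Gaussian posterior and a standard normal prior N(0, I), and the function names `sample_z` and `kl_to_standard_normal` are hypothetical, chosen only for illustration.

```python
import math
import random


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, diag(exp(log_var))) || N(0, I) ), summed over dimensions.

    Assumption: the posterior is a diagonal Gaussian and the prior is N(0, I).
    """
    return 0.5 * sum(
        math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mu, log_var)
    )


def sample_z(mu, log_var, rng):
    """Reparameterization trick: z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mu, log_var)
    ]


# Hypothetical posterior parameters, standing in for an encoder's output
# given (Q, A); a real model would compute these with a neural network.
rng = random.Random(0)
mu = [0.5, -0.3]
log_var = [0.0, -1.0]

z = sample_z(mu, log_var, rng)            # latent code fed to the decoder
kl = kl_to_standard_normal(mu, log_var)   # penalty added to the training loss
print("z =", z)
print("KL =", kl)
```

In a setup like this, the KL term is zero only when the posterior equals the prior, so minimizing it alongside the reconstruction loss keeps z close to the Gaussian that is sampled from at generation time. Drawing different values of z for the same question is what lets the decoder produce different answers.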