Large-Scale Answerer in Questioner's Mind for Visual Dialog Question Generation

A project I did during my summer internship at Naver.

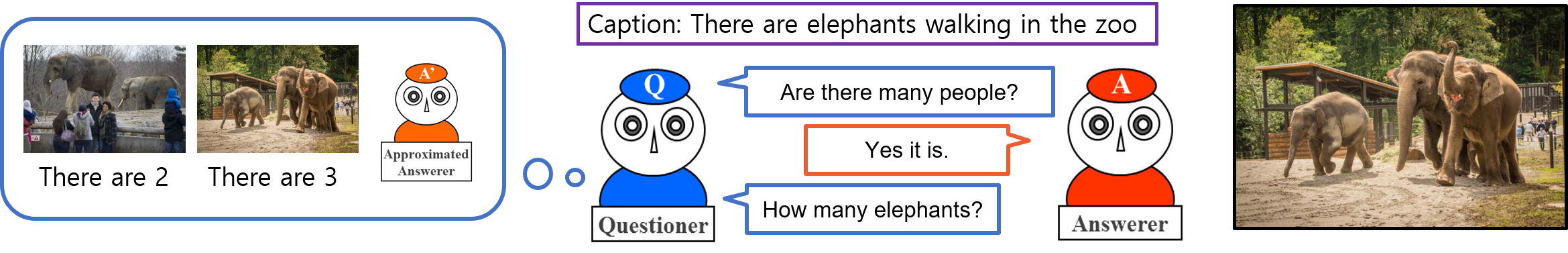

An illustration of our algorithm in the GuessWhich game.

Visual Dialog is a novel task that requires an AI agent to hold a meaningful dialog with humans in natural, conversational language about visual content. In this project, we focus on a simple game called GuessWhich, a cooperative two-player game in which one player tries to identify, out of 9,628 candidates, the image that the other player has in mind. At the beginning of the game, the questioner knows only the caption of the chosen image, while the answerer sees the image itself. The questioner then asks questions over the following 10 rounds and relies on the answerer's replies to rank the images by how likely each is to be the target. In our case, both players are bots.

While previous papers usually train the agents with reinforcement learning, we extended AQM, a recently proposed question selection algorithm, to a more complicated scenario in which the search space becomes infinite. Our algorithm, AQM+, handles this setting well and outperforms the baseline significantly. The paper has been accepted to the Visually Grounded Interaction and Language (ViGIL) workshop at NIPS 2018 and is under review at the Seventh International Conference on Learning Representations (ICLR 2019).
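To make the idea of question selection concrete, here is a minimal sketch. It assumes the questioner picks the question with the highest expected information gain about the target image and then updates its belief with Bayes' rule. This page does not spell out the objective, so treat that as an assumption. The function names and the toy probability tables are hypothetical, and the sketch enumerates a small finite set of questions and answers, which is exactly what stops being possible once the search space is infinite.

```python
import math

def information_gain(prior, answer_probs):
    """Mutual information between the target image C and the answer A.

    prior[c]           -- current belief p(c) over candidate images
    answer_probs[c][a] -- assumed answerer model p(a | c, question)
    """
    n_answers = len(answer_probs[0])
    # Marginal p(a) = sum_c p(c) * p(a | c)
    marginal = [
        sum(prior[c] * answer_probs[c][a] for c in range(len(prior)))
        for a in range(n_answers)
    ]
    gain = 0.0
    for c, p_c in enumerate(prior):
        for a in range(n_answers):
            p_a_given_c = answer_probs[c][a]
            if p_c > 0 and p_a_given_c > 0:
                gain += p_c * p_a_given_c * math.log(p_a_given_c / marginal[a])
    return gain

def select_question(prior, likelihoods):
    """Return the candidate question with the largest information gain.

    likelihoods maps each question to its answer_probs table.
    """
    return max(likelihoods, key=lambda q: information_gain(prior, likelihoods[q]))

def update_posterior(prior, answer_probs, answer):
    """Bayes update of the belief over images after observing an answer."""
    unnormalized = [p * answer_probs[c][answer] for c, p in enumerate(prior)]
    total = sum(unnormalized)
    return [u / total for u in unnormalized]

# Toy example: 4 candidate images, 2 possible answers (0 = "yes", 1 = "no").
prior = [0.25, 0.25, 0.25, 0.25]
likelihoods = {
    # Splits the candidates in half, so the answer is informative.
    "is it outdoors?": [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]],
    # Same answer distribution for every image, so the answer tells us nothing.
    "is it a photo?": [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5], [0.5, 0.5]],
}
best = select_question(prior, likelihoods)
posterior = update_posterior(prior, likelihoods[best], answer=0)
# Rank image indices from most to least likely under the updated belief.
ranking = sorted(range(len(posterior)), key=lambda c: -posterior[c])
```

In this toy setup the selector prefers the question that splits the candidates, and the posterior after a "yes" concentrates on the first two images. Repeating the select, ask, update loop for each round gives the final ranking.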

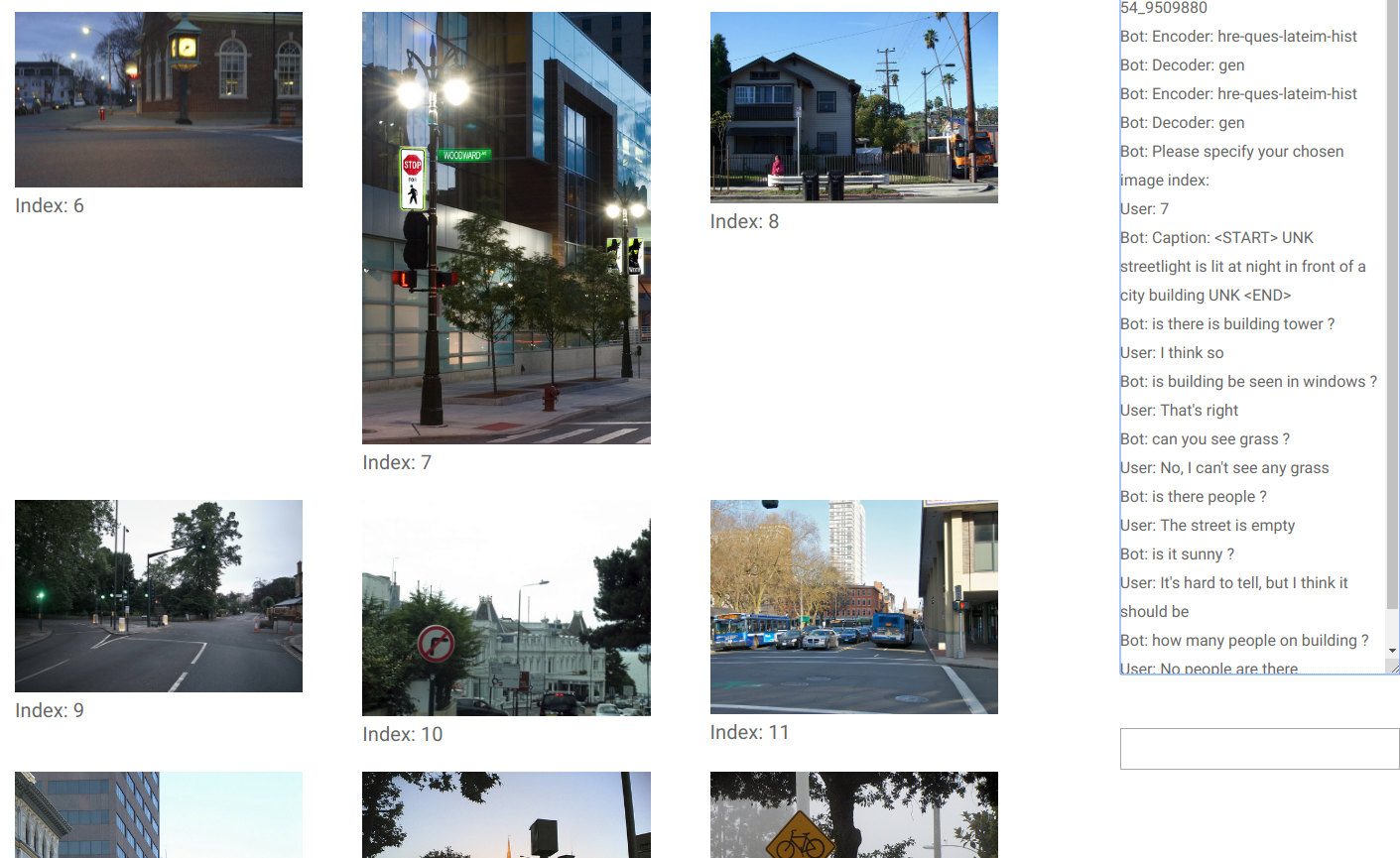

A demo of a bot-human dialogue.